YouTube releases data on removal of rules-breaking videos - It's in millions

YouTube has released data that suggests that it is getting better at spotting and removing videos that break its rules against disinformation, hate speech and other banned content.

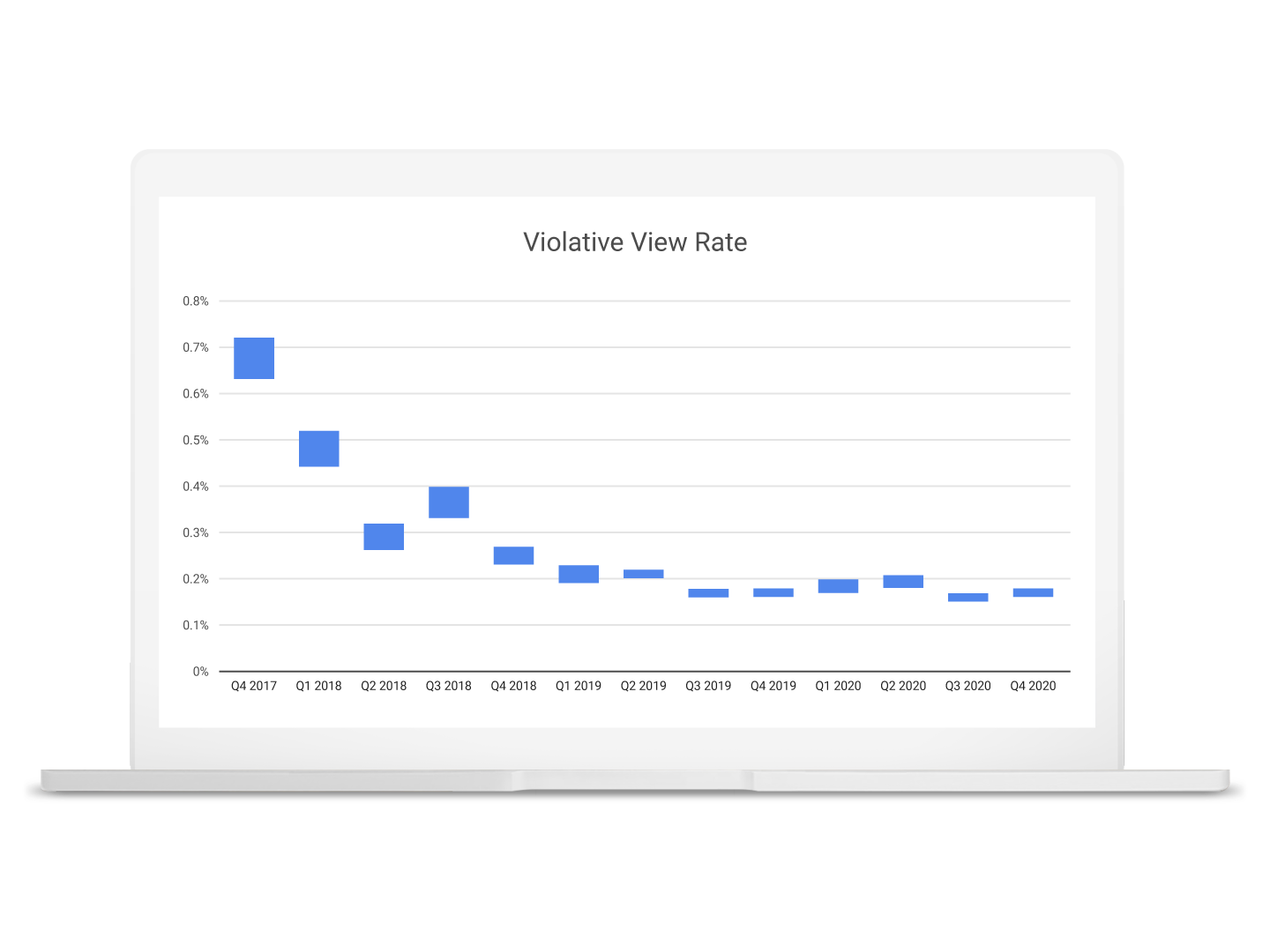

The world's most popular video platform said 0.16% to 0.18% of all the views on it in the fourth quarter of 2020 were on content that broke its rules. That is, out of every 10,000 views on YouTube, 16 to 18 come from violative content. That’s down 70% from the same period in 2017, the year the company began tracking it.
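To make the arithmetic concrete, here is a minimal Python sketch of the conversion between a percentage rate and a count per 10,000 views. The function names are hypothetical, and treating the rate as a plain ratio of violative views to total views is an assumption made for illustration; YouTube's actual measurement method is not described here.

```python
def violative_view_rate(violative_views: float, total_views: float) -> float:
    """Return the share of views on violative content, as a percentage.

    Assumption: the rate is a simple ratio of the two view counts.
    """
    if total_views <= 0:
        raise ValueError("total_views must be positive")
    return violative_views / total_views * 100


def views_per_10k(rate_percent: float) -> float:
    """Convert a percentage rate into a count per 10,000 views."""
    return rate_percent / 100 * 10_000


# The reported range for the fourth quarter of 2020.
low = views_per_10k(0.16)
high = views_per_10k(0.18)
print(f"{low:.0f} to {high:.0f} violative views per 10,000")
```

Run as written, this prints `16 to 18 violative views per 10,000`, matching the figures quoted above.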

The catch, though, is that content has to violate YouTube's own rules to be counted, and those rules, in many cases, don't cover all bases.

Also, considering the huge scale of YouTube's total viewing, how much misleading, dangerous, hateful or offensive content people are actually watching is difficult to gauge.

YouTube, by most estimates, has more than 2 billion monthly users and more than 500 hours of video uploaded to it every minute.


Over 83 million YouTube videos removed so far

The stats on violative content come as part of YouTube's Community Guidelines Enforcement Report, an in-depth look at all of the content that gets removed from YouTube for violating policies.

YouTube's Community Guidelines Enforcement Report was announced in 2018 to ensure accountability with regard to the content that's allowed on the platform.

Since launching this report, YouTube has removed over 83 million videos and 7 billion comments for violating its Community Guidelines.

The latest metric, released for the first time and named Violative View Rate (VVR), is YouTube's way of saying that its efforts to push back against problematic content are paying dividends.

"We’re now able to detect 94% of all violative content on YouTube by automated flagging, with 75% removed before receiving even 10 views," YouTube said in a blog post.

It's a work in progress

"The Community Guidelines Enforcement Report documents the clear progress made since 2017, but we also recognize our work isn't done," YouTube added.

"It’s critical that our teams continually review and update our policies, work with experts, and remain transparent about the improvements in our enforcement work. We’re committed to these changes because they are good for our viewers, and good for our business—violative content has no place on YouTube."

YouTube did not spell out the kinds of policy violations that are being viewed before the videos are yanked from the platform.

YouTube, like other social media platforms, has to wrestle with the problem of balancing freedom of expression with effective policing of hateful and misleading content.

from TechRadar - All the latest technology news https://ift.tt/3uuR3BC
